stateDiagram

i : Input text

state i{

T0 : Text A1

T1 : Text A2

}

r : Hidden layers

state r{

RNN1 : Node1

RNN2 : Node2

RNN3 : Node3

RNN4 : Node4

}

o : Output layers

state o{

o1 : Output layer 1

o2 : Output layer 2

}

T0 --> RNN1

T1 --> RNN1

T0 --> RNN2

T1 --> RNN2

T0 --> RNN3

T1 --> RNN3

T0 --> RNN4

T1 --> RNN4

RNN1 --> o1

RNN2 --> o1

RNN3 --> o1

RNN1 --> o2

RNN2 --> o2

RNN3 --> o2

Media Reporting and Suicide

Natural language processing evaluation and monitoring

Overview

- Project Task

- Project Background

- Suicide

- Suicide reporting and its impact

- Key Technical Skills

- Overview of natural language processing

- Large language model (LLM)

- Instructing LLM

- Chain of thought

Project task

Project task

Does a machine learning model have the potential to evaluate suicide news reports?

- Specific Tasks

- Coding Hong Kong local suicide news reports

- Developing an LLM prompt to examine whether an LLM can evaluate suicide news reporting style

Suicide

Suicide

Global Statistics

- ~800,000 deaths annually (WHO, 2023)

- Suicide is the 4th leading cause of death among 15-29-year-olds

Ideation-to-Action Framework

Klonsky and May (2015) proposed the framework from ideation (suicidal thoughts) to action (behaviors).

Key Risk Factors

- Psychological factors: Depression, Schizophrenia

- Socioeconomic factors: Relationships, access to lethal means

- Biological factors: Cancer


Biological perspective of suicide

- Control of Impulsive Behavior

- Associated with the prefrontal cortex (Mann 2002).

- Heritability

- Twin studies show a 0.2~0.45 concordance rate for suicidal behavior (Pedersen and Fiske 2010).

Implications for suicide prevention

From a population-level perspective, interventions targeting the whole community usually focus on the following aspects:

- Means control

- Health promotion

Discussion

Have you seen any suicide prevention efforts in your daily life?

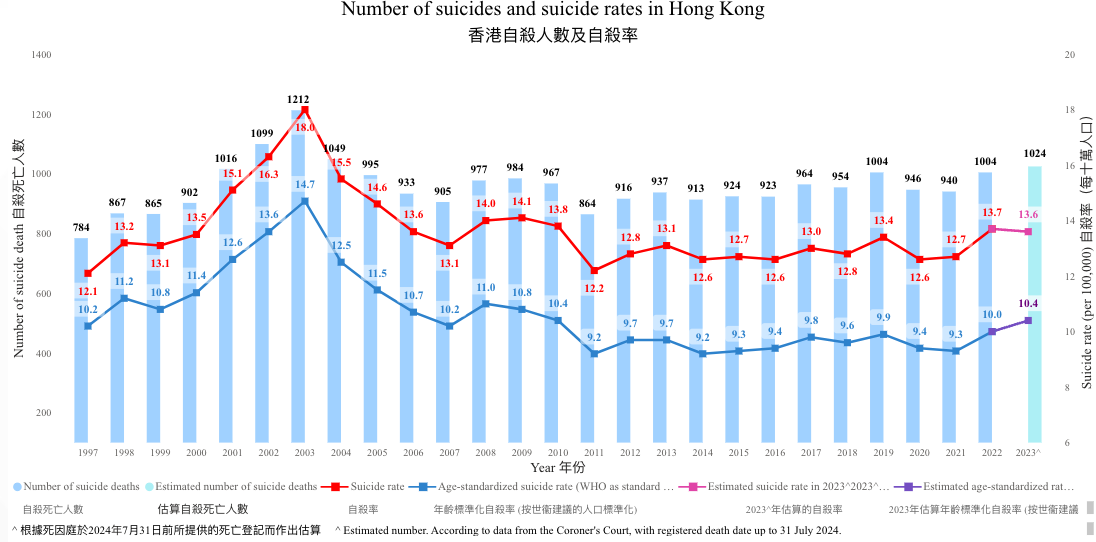

Overview of suicide in Hong Kong

Suicide rate in Hong Kong

Media Reporting on Suicide

Media Impact on suicide

- Arguments on Media Effects

Media reporting can influence suicidal behavior through imitation, protection, or neutrality.

There are arguments about different effects:

- Papageno effect (Positive effect)

- No effect

- Werther effect (Negative effect)

Papageno effect

Definition: Media stories of coping, hope, and recovery can reduce suicidal ideation and encourage help-seeking.

Evidence

Counterarguments: A systematic review (Sisask and Värnik 2012) does not confirm the effect.

Werther effect

Definition: An increase in suicides following detailed, sensationalist media reports, especially reports of celebrity suicides. Such reporting promotes imitation, as described by social learning theory.

Evidence

Debating on the Effects

- What is the Effect?

- Can we establish a causal relationship between media reporting and suicide?

- Individual studies show positive/negative effects, but what then?

Discussion

- Have you read any local suicide news?

- What did you feel after reading it?

- What have you learned from the news?

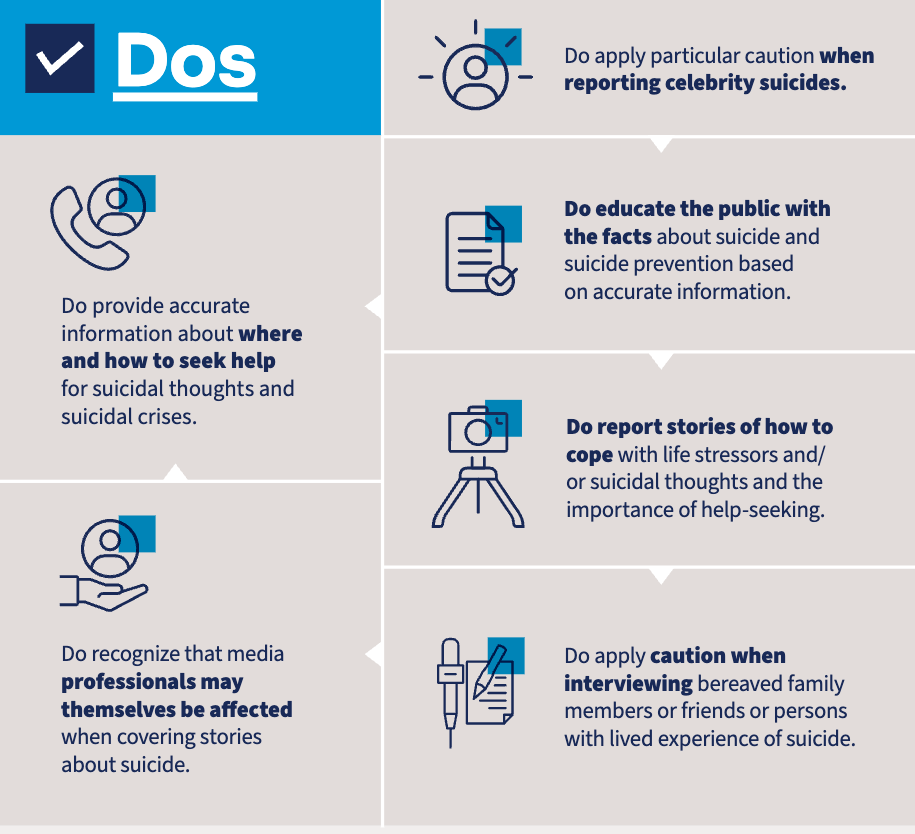

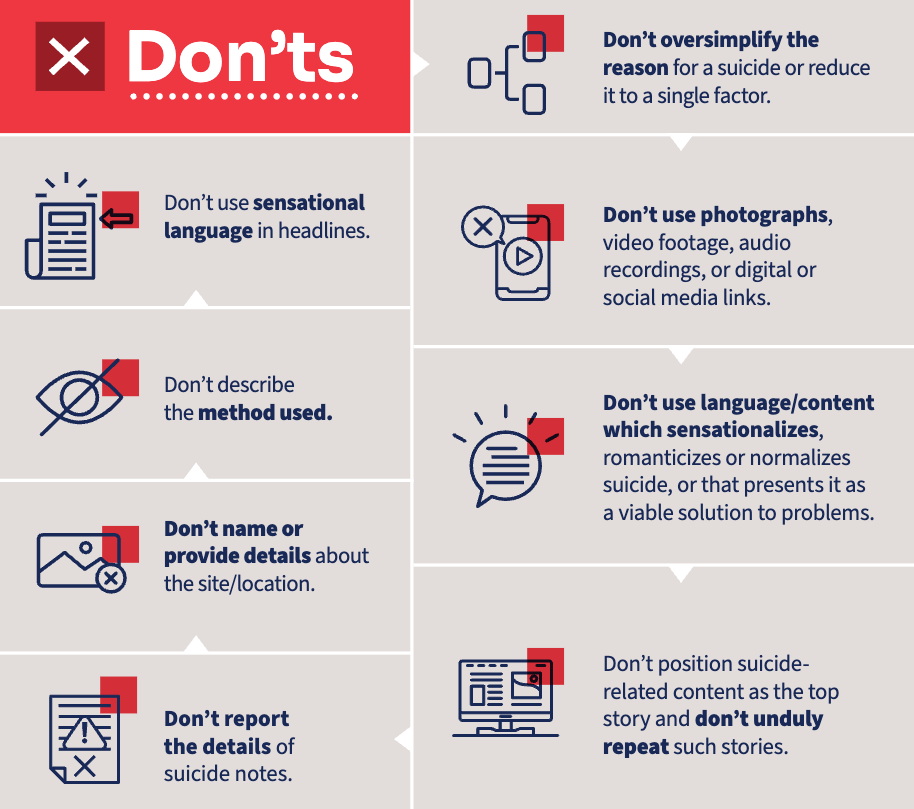

Responsible reporting style

WHO guidelines on ethical reporting

The news media’s impact on suicide depends on how it is reported. A responsible reporting style is needed.

- WHO (2023) developed guidelines for good reporting styles.

Dos

Don’ts

Assessing the Quality of News Reporting

Natural language processing

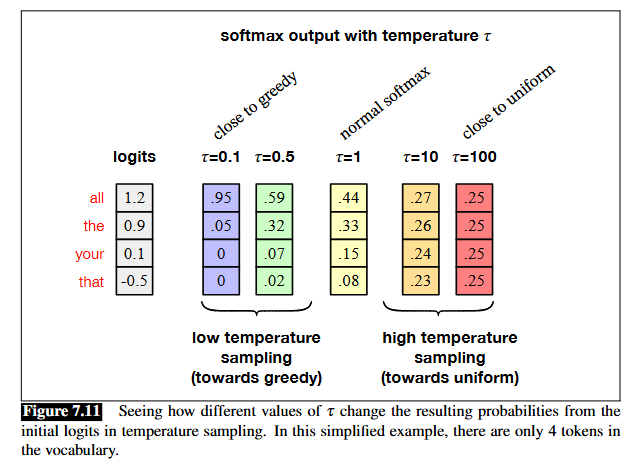

Natural language processing

Definition: A field that uses computers to understand and analyze human language.

You might also hear the term computational linguistics, which uses quantitative approaches to study human language; most of the time the two terms are used interchangeably.

Discussion

If a natural language processing task falls within the scope of traditional machine learning (or even deep learning), why is it so special that it is usually discussed separately?

Scope of NLP: We can still use the machine learning taxonomy to categorize tasks:

- Unsupervised learning

- Encoding language

- Ranking/Searching documents

- Supervised learning

- Classification of parts of speech, sentiment, etc.

- Generative models

- Machine translation

- Text-to-speech

- Chatbots

Why modeling text is so different

- Modeling perspective

- Dynamic input shape

- Context awareness

- Training time

- Evaluation

- Data perspective

- Ambiguity

- Dirty data

For the rest of the slides, we will discuss NLP from these three aspects:

- Data processing

- Modeling

- Evaluation

Our whole universe was in a regression

We start from a regression setting, and we define the input and outcome pair \((x_i,y_i)\) with total \(n\) observations, where

- \(x_i = [x_{i1}\dots x_{ip}]\) : feature vectors with size \(p\), and

- \(y_i\) : outcome:

- \(y_i \in \{0,1\} \rightarrow\) Logistic regression, other classification methods

- Sentiment analysis

- Spam detection

- Language Identification

- \(y_i \in \mathbb{R} \rightarrow\) other GLMs

- Product rating

Fantastic Features and where to find them

An intuitive way is to set the p-th feature of document i as

\[\begin{align*} x_{ip} & = 1 \text{ if the i-th document contains word "XXXX"} \\ & = 0 \text{ if the i-th document does not contain word "XXXX"} \end{align*} \]

There are also other alternatives:

\[ x_{ip} \in \mathbb{Z}_{\ge 0} \text{ : the number of times "XXXX" occurs in document } i \]
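The two feature definitions above can be sketched in a few lines of Python. This is only an illustration: the vocabulary, the example sentence, and the simple regex tokenizer are assumptions, not part of the original slides.

```python
import re
from collections import Counter

def tokenize(document):
    """Lowercase a document and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", document.lower())

def binary_features(document, vocabulary):
    """x_ip = 1 if the document contains vocabulary word p, else 0."""
    words = set(tokenize(document))
    return [1 if word in words else 0 for word in vocabulary]

def count_features(document, vocabulary):
    """x_ip = number of times vocabulary word p occurs in the document."""
    counts = Counter(tokenize(document))
    return [counts[word] for word in vocabulary]

# Hypothetical dictionary and document, for illustration only.
vocabulary = ["help", "hope", "method"]
doc = "Hope matters. Seek help, offer help."

print(binary_features(doc, vocabulary))  # [1, 1, 0]
print(count_features(doc, vocabulary))   # [2, 1, 0]
```

Either vector can then be used as the row \(x_i\) of a regression design matrix.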

Discussion

- What is the implied distribution of \(x_{ip}\)?

- What is the limitation of the regression approach?

Then neural network expansion started. Wait..

The key questions at this moment lie in two aspects

- Understanding the context

- Recurrent neural network (RNN)

- Long short-term memory (LSTM)

- Transformer

- LLM

- Better feature engineering

- Autoencoder

- Multi-layer perceptron (MLP)

- Convolutional neural network (CNN)

Multi-layer perceptron (MLP)

Adjustment of input

Treat text as a time series: \[ x_{ijp} = 1 \text{ if the } j\text{-th word in the } i\text{-th document is the word with index } p \]

We need to create a dictionary index \(\{p:"XXX"\}\)
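A minimal sketch of this encoding, assuming whitespace tokenization and a dictionary built from the documents themselves (both are simplifications for illustration):

```python
def build_index(documents):
    """Map each distinct word to an integer index p (the dictionary index)."""
    index = {}
    for document in documents:
        for word in document.lower().split():
            index.setdefault(word, len(index))
    return index

def one_hot_sequence(document, index):
    """Row j holds x_{ijp}: a single 1 at the index p of the j-th word."""
    rows = []
    for word in document.lower().split():
        row = [0] * len(index)
        row[index[word]] = 1
        rows.append(row)
    return rows

# Hypothetical two-document corpus.
docs = ["seek help early", "help is available"]
index = build_index(docs)
x = one_hot_sequence(docs[1], index)
for row in x:
    print(row)
```

Words missing from the dictionary raise a `KeyError` in this sketch; a practical pipeline would map them to a reserved "unknown" index instead.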

RNN and LSTM

- What did MLP and CNN not achieve?

- Context

- Number of parameters

- Solution

- Asking nodes to memorize context

- Using this memory as an additional input

stateDiagram

i : Input text

state i{

T0 : Text A1

T1 : Text A2

T2 : Text A3

}

r : Hidden layers

state r{

RNN1 : Node1

RNN2 : Node2

RNN3 : Node3

}

o : Output layers

state o{

o1 : Output layer 1

o2 : Output layer 2

}

T0 --> RNN1

T1 --> RNN2

T2 --> RNN3

RNN1 --> o1

RNN2 --> o1

RNN3 --> o1

RNN1 --> o2

RNN2 --> o2

RNN3 --> o2

RNN1 --> RNN2

RNN2 --> RNN3

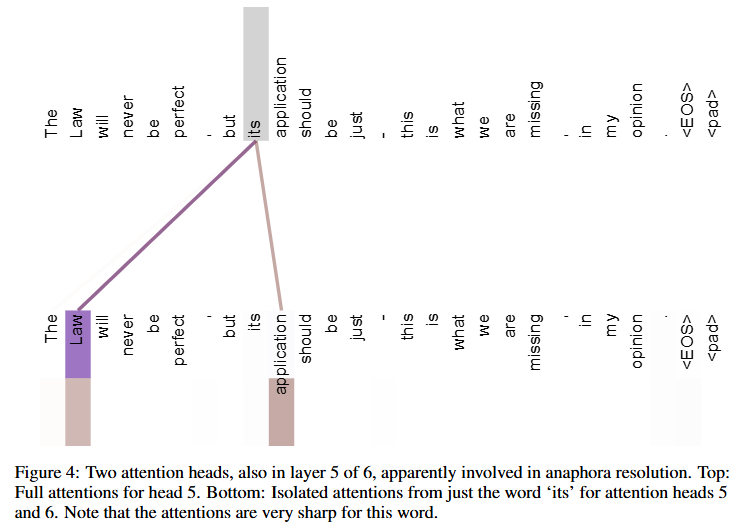
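The recurrence in the diagram, where each node receives the current token and the previous node's state, can be sketched as a plain Elman-style RNN step. The weights, sizes, and the tanh activation below are assumptions chosen for illustration; the output layers are omitted.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h = []
    for k in range(len(b)):
        total = b[k]
        total += sum(w_x[k][d] * x[d] for d in range(len(x)))
        total += sum(w_h[k][m] * h_prev[m] for m in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

def rnn_forward(sequence, w_x, w_h, b):
    """Feed the tokens in order, passing the hidden state (memory) forward."""
    h = [0.0] * len(b)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Three one-hot tokens (Text A1..A3), hidden size 2, hypothetical weights.
sequence = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
w_x = [[0.5, -0.3, 0.1], [0.2, 0.4, -0.6]]
w_h = [[0.7, 0.0], [0.1, 0.3]]
b = [0.0, 0.0]

for t, state in enumerate(rnn_forward(sequence, w_x, w_h, b)):
    print(t, [round(v, 3) for v in state])
```

Because the same weight matrices are reused at every step, the parameter count does not grow with the length of the text.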

Attention, transformer and LLM

- The Transformer was introduced in Vaswani et al. (2017), "Attention Is All You Need"; it uses self-attention to process input data efficiently.

- Instead of memorizing the previous state, the attention mechanism dynamically calculates the importance of each input based on the context.

stateDiagram

i : Input text

state i{

T0 : Text A1

T1 : Text A2

T2 : Text A3

}

e : Embedding

state e {

Query1 : Query A1

Key1 : Key A1

Key2 : Key A2

Key3 : Key A3

Value1 : Value A1

Value2 : Value A2

Value3 : Value A3

}

w : Attention Weight

state w {

weight1 : Weight A1

weight2 : Weight A2

weight3 : Weight A3

}

T0 --> Query1

T0 --> Key1

T0 --> Value1

T1 --> Key2

T1 --> Value2

T2 --> Key3

T2 --> Value3

Query1 --> weight1

Key1 --> weight1

Query1 --> weight2

Key2 --> weight2

Query1 --> weight3

Key3 --> weight3

weight1 --> output

Value1 --> output

weight2 --> output

Value2 --> output

weight3 --> output

Value3 --> output
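The diagram corresponds to attention for a single query: the query is compared with every key to get weights, and the output is the weighted sum of the values. Below is a sketch using scaled dot-product scores and a softmax, as in Vaswani et al. (2017). The vectors are made-up numbers; in a real Transformer the queries, keys, and values come from learned linear projections of the token embeddings.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Return the attention weights and the weighted sum of the values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[i] for w, value in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

# Hypothetical 2-dimensional query (token A1) and keys/values (tokens A1..A3).
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

weights, output = attend(query, keys, values)
print([round(w, 3) for w in weights])
print([round(o, 3) for o in output])
```

The key most similar to the query receives the largest weight, so its value dominates the output.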

All I need is data

Training an NLP model requires a huge amount of data. For example, OpenAI revealed (Brown et al. 2020) that the majority of the training data sources are English data from:

- Web pages

- Social media and forum posts

- Books

- Wikipedia

- User feedback (for GPT-4 and onwards)

Discussion

What are the implications of these data sources in terms of

- Performance

- Privacy

Pre-processing data

As the first step of data analysis, pre-processing is key to model quality and performance.

- Cleaning data

- Pre-processing issue

- Encoding text into vector space

- Tokenization

- Embedding

- Labeling data

Cleaning data

- Manual typos, non-text elements

- Stopwords

- Function words (e.g. articles, pronouns, conjunctions, …)

- Overused verbs

- Inflection (tense, gender, plural)
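A rough sketch of the first two cleaning steps (non-text elements and stopwords) is shown below. The stopword list is a tiny hypothetical one and the regular expressions are deliberately crude; inflection is not handled here and would usually need a stemmer or lemmatizer.

```python
import re

# Tiny illustrative stopword list, not a standard one.
STOPWORDS = {"a", "an", "the", "and", "of", "for", "with", "is", "was"}

def clean(text):
    """Strip markup and punctuation, lowercase, and drop stopwords."""
    text = re.sub(r"<[^>]+>", " ", text)           # drop HTML-like tags
    text = re.sub(r"[^a-z\s]", " ", text.lower())  # keep letters and spaces
    return [word for word in text.split() if word not in STOPWORDS]

print(clean("<p>The kitchens AND toilets were enlarged!</p>"))
# ['kitchens', 'toilets', 'were', 'enlarged']
```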

Discussion

- Why can LLM accept our typos?

- If LLM can comprehend typos, then do I need to clean data?

Tokenizing text

Tokenization means breaking sentences down into smaller pieces (tokens)

A straightforward way is word tokenization

New public housing with enlarged toilets and kitchens for the convenience of wheelchair users.

新公屋廁廚加大便利輪椅戶

- Sentence segmentation

- English: 14 tokens

- A rule-based approach can work nearly perfectly

- Chinese: ?? tokens

- Dynamic programming approach

- Deep-learning approach
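The contrast between the two languages can be sketched as follows. English splits on a simple rule, while Chinese needs a dictionary and a search. The dynamic program below picks the segmentation with the fewest tokens. The word list is a hypothetical toy dictionary, so the resulting segmentation (and token count) is only one possible answer and depends entirely on that dictionary.

```python
import re

def tokenize_english(sentence):
    """Rule-based word tokenization: runs of letters are tokens."""
    return re.findall(r"[A-Za-z]+", sentence)

def segment_chinese(text, vocab, max_len=4):
    """Dynamic programming: fewest-token segmentation given a dictionary.

    Single characters are always allowed as a fallback token.
    """
    n = len(text)
    best = [0] + [n + 1] * n   # best[i] = fewest tokens covering text[:i]
    back = [0] * (n + 1)       # back[i] = start of the last token
    for i in range(1, n + 1):
        for j in range(max(0, i - max_len), i):
            piece = text[j:i]
            if (len(piece) == 1 or piece in vocab) and best[j] + 1 < best[i]:
                best[i] = best[j] + 1
                back[i] = j
    tokens = []
    i = n
    while i > 0:
        tokens.append(text[back[i]:i])
        i = back[i]
    return tokens[::-1]

english = ("New public housing with enlarged toilets and kitchens "
           "for the convenience of wheelchair users.")
print(len(tokenize_english(english)))  # 14

# Hypothetical toy dictionary; note that it contains overlapping candidates.
toy_vocab = {"公屋", "加大", "便利", "大便", "輪椅"}
print(segment_chinese("新公屋廁廚加大便利輪椅戶", toy_vocab))
```

The overlapping dictionary entries show why a purely local rule is risky for Chinese: the same characters can be grouped in more than one way, and the search has to choose among them.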

Subword Tokenization

Word-level encoding is prone to problems with

- Misspellings

- Grammatical variations, which are hard to cater to

Subword tokenization: breaking words into smaller subwords. In addition to addressing the issues above, it gives

- A higher chance of handling out-of-dictionary terms

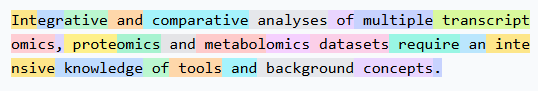
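One common way to implement subword tokenization is greedy longest-match against a subword vocabulary, in the style of WordPiece. The sketch below assumes a small hand-written vocabulary and the `##` prefix convention for word-internal pieces; real tokenizers learn the vocabulary from data.

```python
def subword_tokenize(word, vocab):
    """Greedy longest-match split of one word into known subwords."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no known subword matches at this position
        pieces.append(piece)
        start = end
    return pieces

# Hypothetical subword vocabulary.
vocab = {"kitchen", "##s", "en", "##large", "##d", "wheel", "##chair"}

print(subword_tokenize("kitchens", vocab))    # ['kitchen', '##s']
print(subword_tokenize("enlarged", vocab))    # ['en', '##large', '##d']
print(subword_tokenize("wheelchair", vocab))  # ['wheel', '##chair']
```

Here "kitchens" is not in the vocabulary as a whole word, yet it is still encoded from known pieces rather than dropped.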

Embedding

Count-based embedding

- Sentence level: we build a vocabulary (strictly, a set of tokens) from the corpus and create a \(|V|\times |V|\) adjacency (co-occurrence) matrix where

- Each element \(v_{ij}\) counts how many times words \(i\) and \(j\) appear next to each other (a window of one word)
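A minimal sketch of building such counts, stored sparsely as a dictionary keyed by word pairs rather than as a dense \(|V|\times|V|\) array. The two example sentences are made up.

```python
from collections import defaultdict

def cooccurrence(sentences, window=1):
    """Count how often two tokens appear within `window` positions."""
    counts = defaultdict(int)
    for tokens in sentences:
        for i, left in enumerate(tokens):
            for right in tokens[i + 1 : i + 1 + window]:
                counts[(left, right)] += 1
                counts[(right, left)] += 1  # keep the matrix symmetric
    return counts

# Hypothetical pre-tokenized sentences.
sentences = [["seek", "help", "early"], ["help", "early"]]
counts = cooccurrence(sentences)

print(counts[("help", "early")])  # 2
print(counts[("seek", "help")])   # 1
```

Row \(i\) of the matrix (all counts involving word \(i\)) then serves as the embedding of word \(i\).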

Word2vec

Another brute-force way is to let the machine learn the embeddings itself through self-supervised learning with an autoencoder-like network.

- Input: Sentence + word

- Output: Words within 2 positions of the input word
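The training data for this setup can be generated directly from raw text, with no manual labels. The sketch below produces (input word, nearby word) pairs with a window of 2, which is the skip-gram flavor of word2vec; the network that is then trained on these pairs is not shown.

```python
def skipgram_pairs(tokens, window=2):
    """List (center, context) pairs for words within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

tokens = ["new", "public", "housing", "with"]
pairs = skipgram_pairs(tokens)
print(len(pairs))  # 10
print(pairs[:2])   # [('new', 'public'), ('new', 'housing')]
```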

Discussion

These tokens are clearly represented in a high-dimensional, sparse format. What are the potential implications if we use these embeddings directly for analysis?

Large language model

Why or Why not LLM

Discussion

- If doing a data science job on language is so troublesome, why not just throw away those old-fashioned methods and embrace LLMs?

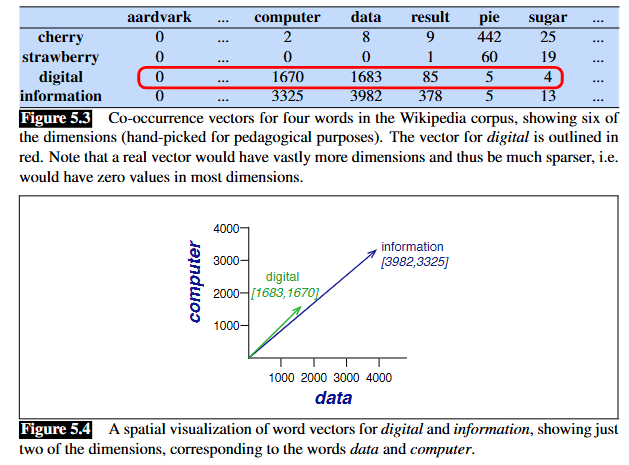

Techniques of customizing LLM


- Prompting

- Instructing the LLM on the task

- Retrieval-Augmented Generation (RAG)

- Providing external references

- Fine-tuning

- Retraining the model

Consideration of different customization

Budget

![GPT-5 quotation price at 2025-09-01]()

Scalability: Does the model need to be very generalizable?

- Small, precise model vs. large, generalizable model

Prompt engineering

- Structured Prompts

- Chain-of-Thought (CoT)

- Few-Shot

- Provide a few examples of compliant/non-compliant news.

- Divide and Conquer

Structured prompt

Overall, structured instruction provides steadier and more reliable outcomes

Structured prompt

Role

Task

Context

Format
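One way to keep prompts structured is to assemble them from named sections in code. The helper below is a hypothetical sketch: the function name, section wording, and example texts are all illustrative assumptions, and the optional examples show where few-shot demonstrations would be appended.

```python
def build_prompt(role, task, context, output_format, examples=()):
    """Assemble a structured prompt from Role, Task, Context, and Format."""
    sections = [
        f"Role: {role}",
        f"Task: {task}",
        f"Context: {context}",
        f"Format: {output_format}",
    ]
    # Optional few-shot demonstrations as (input text, expected label).
    for text, label in examples:
        sections.append(f"Example input: {text}\nExample output: {label}")
    return "\n\n".join(sections)

# Hypothetical content, for illustration only.
prompt = build_prompt(
    role="You are a reviewer of news reporting quality.",
    task="Decide whether the article follows responsible reporting guidelines.",
    context="The article is a local news report.",
    output_format="Answer with 'compliant' or 'non-compliant' and one reason.",
    examples=[("<short article text>", "non-compliant")],
)
print(prompt)
```

Keeping each section separate makes it easier to change one part, for example the output format, without rewriting the whole instruction.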

Few-shot learning

Examples

Xu et al. (2024) proposed a structured prompt to address prediction tasks in mental health-related topics using LLMs:

\[ \begin{aligned} \text{Prompt} = \text{Analyzed text} + \\ \text{Outcome and context} + \text{Question} + \\\text{Constraints} \end{aligned} \]

Nori et al. (2024) argue that few-shot learning does not always yield better results

Discussion

- Why does

- structured prompt

- few-shot learning

Chain of thought (Wei et al. 2022)

Idea: Include in the prompt a worked illustration of how you would solve the problem, so that the LLM understands how to tackle it

Prompt without CoT

Jane was born on the last day of February in 2020. Today is her 15-year-old birthday in the common law. What is the date tomorrow in MM/DD/YYYY?

Prompt with CoT

Example question: Jane was born on the last day of February in 2020. Today is her 15-year-old birthday in the common law. What is the date tomorrow in MM/DD/YYYY?

Example Answer: In common law practice, for leap year babies the legal birthday in common years is March 1st, and 2020+15=2035. So today is 03/01/2035 and tomorrow is 03/02/2035

Please answer the following question:

Question: Ray was born on the last day of February in 2016. Today is his 15-year-old birthday in the common law. What is the date tomorrow in MM/DD/YYYY?

| Method | gpt-4o-mini (version:2024-07-18) | Grok 3 | Grok 4 | Standard answer |
|---|---|---|---|---|
| W/o CoT | 03/01/2035 | 09/03/2025 | 03/02/2035 | 03/02/2035 |
| W/ CoT | 03/02/2031 | 03/02/2031 | 03/02/2031 | 03/02/2031 |

How is text generated by an LLM?

- Greedy decoding - local optimal strategy

Choose the highest-probability token (\(w_t\)) given the input \(\textbf{w}_{<t}\) (the prompt and your previous output)

\[ \hat{w_t} = \arg\max_{w \in V} P(w | \textbf{w}_{<t}) \]

- Random sampling - probabilistic approach

Instead of returning the optimal solution, we allow flexibility in generation through probability sampling.

\[ w_t \sim P(w_t | \textbf{w}_{<t}) \]
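The two decoding rules differ only in how the next token is picked from the model's distribution. The sketch below uses a made-up next-token distribution in place of a real model.

```python
import random

def greedy_decode(probs):
    """Pick the token with the highest probability (arg max)."""
    return max(probs, key=probs.get)

def random_sample(probs, rng):
    """Draw one token at random, in proportion to its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical P(w | w_<t) over a three-word vocabulary.
next_token_probs = {"help": 0.6, "hope": 0.3, "harm": 0.1}
rng = random.Random(0)  # fixed seed so the run is repeatable

print(greedy_decode(next_token_probs))  # help
print([random_sample(next_token_probs, rng) for _ in range(5)])
```

Greedy decoding returns the same token every time, while sampling can return any token with nonzero probability, including unlikely ones.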

Discussion

Neither pure greedy decoding nor pure random sampling is typically used in LLMs. What are the disadvantages of these algorithms?

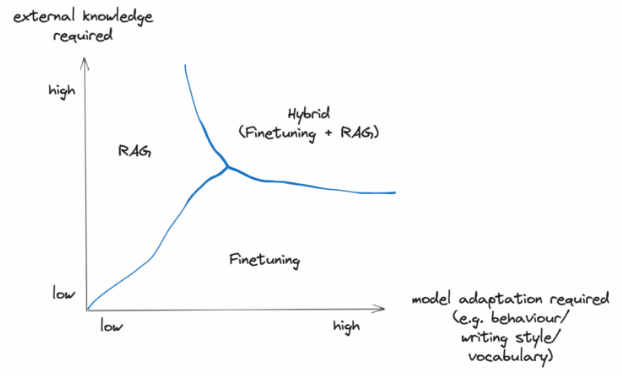

Temperature sampling

- Adjusting the chance of each token being selected in random sampling.

Recall that \(w \sim \text{Mult}(1,p)\)

Therefore, we can use a Bayesian prior to influence this distribution.

- Some thoughts

- Uniform Dirichlet prior => It will be normalized away at the end

- Informative prior => How do we generate an informative prior?

Solution: Scale the logits by a factor \(\frac{1}{\tau}\) before the softmax, which is equivalent to raising each probability to the power \(\frac{1}{\tau}\) and renormalizing
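Written out, the temperature-adjusted distribution is \(p_{\tau}(w) = \exp(z_w/\tau) / \sum_{w'} \exp(z_{w'}/\tau)\), where \(z_w\) is the logit of token \(w\). A small sketch with made-up logits shows the effect of \(\tau\):

```python
import math

def softmax_with_temperature(logits, tau=1.0):
    """Softmax of logits / tau; tau must be positive."""
    scaled = [z / tau for z in logits]
    m = max(scaled)                       # subtract max for stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # hypothetical logits for three tokens

for tau in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, tau)
    print(tau, [round(p, 3) for p in probs])
```

A low temperature sharpens the distribution toward the top token (closer to greedy decoding), and a high temperature flattens it toward uniform.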

Top-p and Top-K sampling

Top-K

Random sampling based on the first \(K\) tokens

- Identifying the \(K\) highest probability tokens

- Drawing samples from these K tokens proportional to their probability

Top-p

Random sampling based on the cumulative distribution

- Identifying the smallest set of the \(n\) highest-probability tokens whose cumulative probability reaches \(p\)

- Drawing samples from these \(n\) tokens proportional to their probability

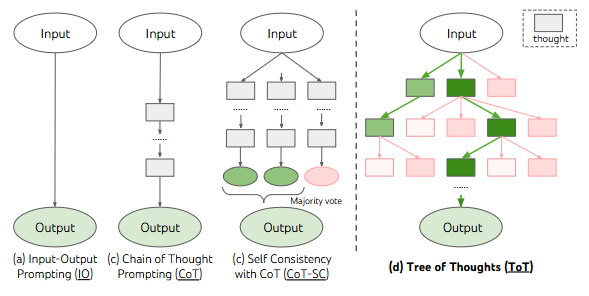

Self-consistency CoT and Tree of Thought (ToT)

Self-consistency CoT (Wang et al. 2022): the random forest of LLMs

- Generate more possible outcomes

- Majority voting over the results

Tree of Thought (Yao et al. 2023): the decision tree of LLMs

- The LLM decomposes the task into several strategies and develops each of them

- Evaluating whether the output has answered the original question
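The self-consistency idea reduces to sampling several answers and taking a majority vote. In the sketch below, `sample_answer` is a hypothetical stand-in for a call to an LLM with sampling enabled, and the canned answers are invented purely to make the example runnable.

```python
from collections import Counter

def self_consistent_answer(sample_answer, prompt, n_samples=5):
    """Sample several answers and return the majority and its vote share."""
    answers = [sample_answer(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples

# Stand-in for an LLM: returns invented answers one by one.
canned = iter(["03/02/2031", "03/01/2031", "03/02/2031",
               "03/02/2031", "02/29/2031"])

def fake_llm(prompt):
    return next(canned)

answer, share = self_consistent_answer(fake_llm, "What is the date tomorrow?")
print(answer, share)  # 03/02/2031 0.6
```

The vote share gives a rough sense of how stable the answer is across samples.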

Evaluation and implementation

Evaluating NLP model performance

Evaluation of NLP largely depends on the task itself. The overall idea is to recast the problem as an MLE- or classification-like evaluation

Commonly seen indicators

- Classification and regression task

- Traditional metrics

- Machine translation

- Bilingual evaluation understudy (BLEU) score

- Generation

- Perplexity

- Summarization

- ROUGE-N

Perplexity

Idea: A good model \(\theta\) assigns the highest probability to the observed text (i.e. \(\arg\max_{\theta} p_{\theta}(w_{1:n})\))

Issue: The likelihood depends on the length of the text and favors short texts

Modification: Perplexity function (the inverse of the geometric mean of the per-token probabilities)

\[ \text{Perplexity}_{\theta}(w_{1:n})=\Biggl(\prod_{i=1}^n\frac{1}{P_{\theta}(w_i|w_{<i})}\Biggr)^{(1/n)} \]
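The formula can be computed from the per-token probabilities \(P_{\theta}(w_i|w_{<i})\) that a model assigns to a text. The sketch below works in log space to avoid underflow on long texts; the probabilities are made-up numbers rather than output from a real model.

```python
import math

def perplexity(token_probs):
    """Inverse geometric mean of the per-token probabilities."""
    n = len(token_probs)
    log_sum = sum(math.log(p) for p in token_probs)
    return math.exp(-log_sum / n)

# Hypothetical probabilities assigned to each token of a short text.
print(perplexity([0.5, 0.5, 0.5]))  # close to 2: like a fair coin per token
print(perplexity([0.9, 0.8, 0.95]))
print(perplexity([0.1, 0.2, 0.05]))
```

Because of the \(1/n\) exponent, repeating the same text twice leaves the perplexity unchanged, which removes the preference for short texts. Lower values mean the model finds the text less surprising.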

Implementing NLP task

Discussion

What are the considerations when selecting a model for your NLP task?

- Output complexity

- Classification, regression problem

- Generation tasks

- Input complexity

- Scope of the input text

- Domain knowledge involved

- Data availability

- Amount of available data

- Cost

- Financial feasibility

- Time feasibility

Ethical and Safety Issues

Bias

The data source is the major contributor to model bias. The model makes its inferences based on the input text, which can carry social and cultural bias.

Hallucination

Hallucination is prominent in LLMs because they generate text probabilistically rather than from facts and references. They can make up things that do not exist at all.

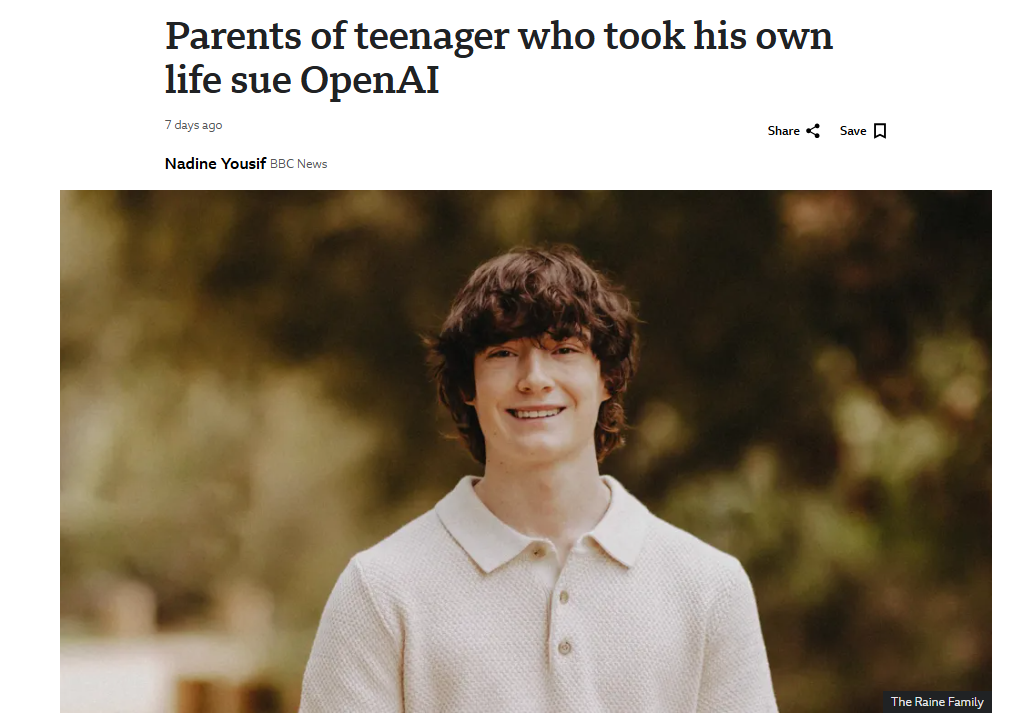

Safe use

Sensitive content (violence, sex, death) can be generated if you intentionally instruct the model to produce it. Using these tools safely is critical.